Lab Course Humanoid Robots

Robots are versatile systems that offer vast opportunities for active research and a wide range of applications. Humanoid robots, for example, have a human-like body and can thus act in environments designed for humans: they can, e.g., climb stairs, walk through cluttered environments, and open doors. Mobile robots with a wheeled base are designed to operate on flat ground and to perform, e.g., cleaning and service tasks. Robotic arms can grasp and manipulate objects.

Participants will work in groups of 2 or 3 on one of the available topics.

At the end of the semester, each group will give a presentation and demonstration of their project, accompanied by an oral explanation. The whole presentation should be approximately 10 minutes long. Every member of the group should present their part in the development of the system in a few sentences or slides. After the presentation, each group will be asked a few questions by the HRL staff members or, preferably, by the other students. Everyone is required to attend and to watch the presentations of the other groups.

Aside from the final demonstration, every group is required to submit a lab report. The report is due on the morning before the demonstration. Please describe the task you had to solve, how you approached the solution, which components your system consists of, any particular difficulties you encountered, and how to compile and use your software. Please include a sufficient number of illustrations. Apart from the content, there are no formal requirements for this document. It is sufficient to submit one lab report per group; it must be pushed to the group's git repository before the lab presentation.

The grade for the lab will depend on the final presentation (30%) and on how well the assigned task was solved (70%).

Participants are expected to have Ubuntu Linux installed on their personal computers. The specific requirements for each project are listed below.

The mandatory Introductory Meeting takes place in person (see the important dates below).

Semester: SS (summer semester)

Year: 2024

Course Number: MA-INF 4214

Links: Basis

Course Start Date: 16.04.2024

Course End Date: 18.09.2024

ECTS: 9

Responsible HRL Lecturers:

Important dates:

All interested students have to attend the Introductory Meeting. In the Introductory Meeting, we will present the projects, the schedule, the registration process, and answer your questions.

| Date | Event |
|---|---|
| 16.04.2024, 10:30-11:30h (room 0.107) | Introductory Meeting (mandatory) [Slides] |
| 21.04.2024, Sunday | Registration deadline and topic selection on our website |
| 25.04.2024, Thursday | Registration deadline in BASIS |
| 25.07.2024, 10:00-11:00h (room 2.025) | Midterm lab presentation |
| 18.09.2024, 09:00-10:30h (room 0.027) | Lab presentation and deadline for lab documentation |

After the Introductory Meeting, each participant arranges an individual schedule with the respective supervisor.

Registration

Report template

Please use the following template for the lab report:

Report template

Projects:

Where is my stuff?

The task is to program a mobile robot to build a map of its environment and to detect and mark objects of interest in it. Afterwards, the robot should plan and execute a path through all object locations, check whether the objects are still there, and report back the results.
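The patrol step can be pictured as ordering the object locations into a tour. The sketch below is only one possible illustration, not a required approach: it assumes object locations are available as 2D map coordinates, uses straight-line distance as a stand-in for the path length a planner would compute on the map, and orders the visits with a greedy nearest-neighbor heuristic. `nearest_neighbor_tour`, `run_patrol`, and the `is_present` callback (a placeholder for driving to a location and running the object detector) are hypothetical names.

```python
import math
from typing import Callable

Point = tuple[float, float]  # (x, y) in map coordinates, e.g. metres


def nearest_neighbor_tour(start: Point, objects: dict[str, Point]) -> list[str]:
    """Order object visits greedily: always go to the closest unvisited object.

    Straight-line distance is used as a stand-in for the real path length.
    """
    remaining = dict(objects)
    order: list[str] = []
    current = start
    while remaining:
        # Pick the remaining object closest to the robot's current position.
        name = min(remaining, key=lambda n: math.dist(current, remaining[n]))
        order.append(name)
        current = remaining.pop(name)
    return order


def run_patrol(start: Point, objects: dict[str, Point],
               is_present: Callable[[str], bool]) -> dict[str, bool]:
    """Visit objects in tour order and record whether each one is still there.

    `is_present` is a hypothetical placeholder for navigating to the object
    and running the detector there.
    """
    return {name: is_present(name) for name in nearest_neighbor_tour(start, objects)}


if __name__ == "__main__":
    objects = {"mug": (4.0, 1.0), "book": (1.0, 0.5), "keys": (2.5, 3.0)}
    print(nearest_neighbor_tour((0.0, 0.0), objects))  # ['book', 'keys', 'mug']
    print(run_patrol((0.0, 0.0), objects, lambda name: name != "keys"))
    # {'book': True, 'keys': False, 'mug': True}
```

The greedy ordering is simple but not guaranteed to be shortest; with only a handful of objects, trying all visiting orders would also be feasible.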

Solving tangram puzzles

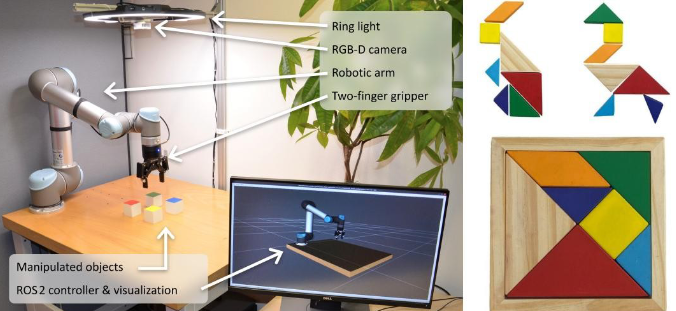

The goal of the project is to generate and assemble tangram puzzles [1] using a robotic arm with a two-finger or suction gripper. The puzzle is generated from an input image, and an assembly sequence has to be determined that ensures the assembly can be executed successfully.

Autonomous racing

The task is to train a reinforcement learning agent to drive autonomously around a given race track. For training in simulation, we use the PyBullet-based simulation from [1]. After the agent has been trained successfully, we deploy it on a real F1TENTH car and drive a track in the hallway of our building.

[1] https://github.com/gonultasbu/racecar_gym-1


Find the viewpoint!

The task is to find the viewpoint (camera pose) from which a given RGB image was taken, in an eye-in-hand tabletop setup, i.e., with the camera mounted on the robot arm.

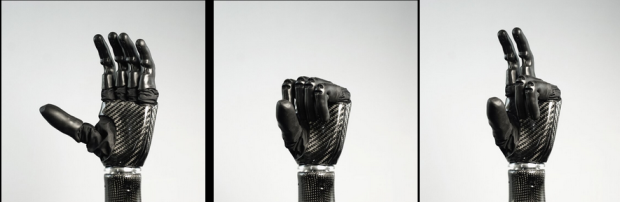

Rock, paper, scissors

The task is to implement a version of Rock Paper Scissors on our 5-finger Psyonic hand [1].

[1] https://www.psyonic.io/ability-hand

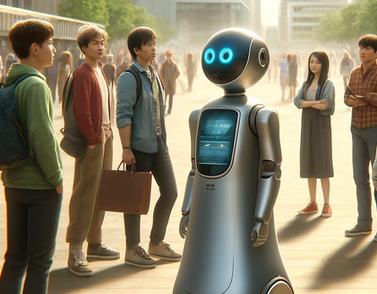
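Apart from recognizing the player's gesture and commanding the hand, the game itself needs only a little logic. The sketch below shows one possible way to structure it; all names are hypothetical, and the finger targets in `GESTURE_POSES` are illustrative values that do not correspond to the Psyonic hand's actual command interface.

```python
import random

# Which gesture each gesture beats.
BEATS = {"rock": "scissors", "paper": "rock", "scissors": "paper"}

# Hypothetical finger targets (thumb, index, middle, ring, pinky),
# 0.0 = fully open, 1.0 = fully closed. Turning these into real hand
# commands depends on the hand's control interface and is not shown.
GESTURE_POSES = {
    "rock": (1.0, 1.0, 1.0, 1.0, 1.0),
    "paper": (0.0, 0.0, 0.0, 0.0, 0.0),
    "scissors": (1.0, 0.0, 0.0, 1.0, 1.0),
}


def robot_move(rng: random.Random) -> str:
    """Pick a gesture uniformly at random; no opponent strategy can exploit this."""
    return rng.choice(sorted(BEATS))


def winner(human: str, robot: str) -> str:
    """Return 'human', 'robot', or 'draw' for one round."""
    if human == robot:
        return "draw"
    return "human" if BEATS[human] == robot else "robot"


if __name__ == "__main__":
    rng = random.Random(0)
    move = robot_move(rng)
    print(move, GESTURE_POSES[move], winner("rock", move))
```

Passing in a seeded `random.Random` keeps rounds reproducible during testing.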

Social force model for crowd simulation

The task is to implement the social force model (SFM) for crowd simulation and to visualize it in a 3D environment.

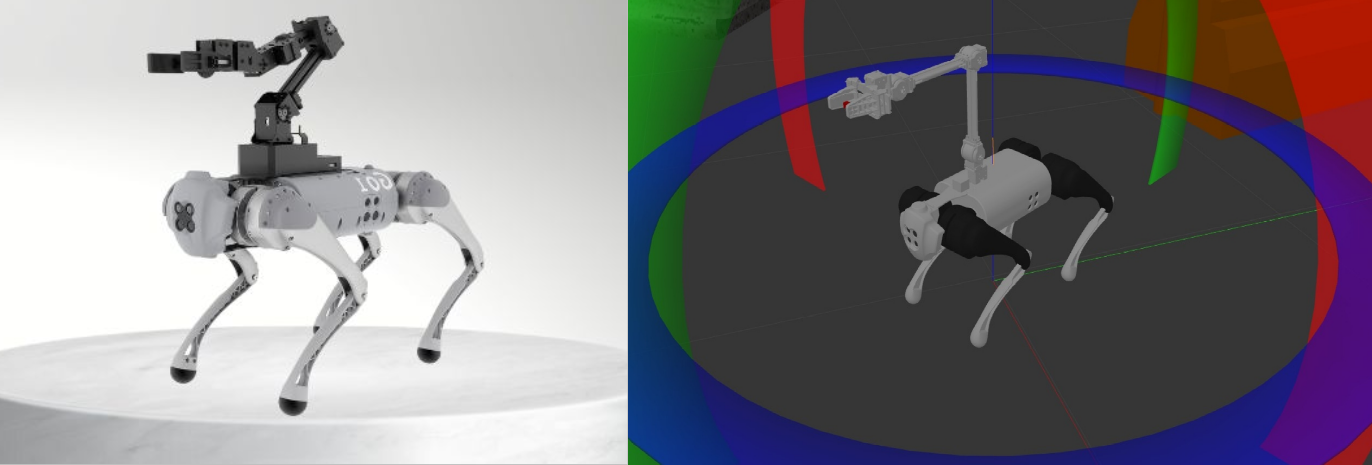
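For orientation, a commonly used form of the social force model drives each pedestrian i by dv_i/dt = (v_i^0 e_i − v_i)/τ + Σ_{j≠i} A·exp((r_ij − d_ij)/B)·n_ij, where e_i is the unit vector toward the goal, v_i^0 the preferred speed, τ a relaxation time, d_ij the distance between pedestrians i and j, r_ij the sum of their radii, and n_ij the unit vector pointing from j to i. The sketch below integrates this with a semi-implicit Euler step. It omits wall forces and body-contact terms, and the parameter values are illustrative assumptions, not calibrated ones.

```python
import math
from dataclasses import dataclass


@dataclass
class Agent:
    pos: tuple[float, float]   # position, m
    vel: tuple[float, float]   # velocity, m/s
    goal: tuple[float, float]  # target position, m
    v0: float = 1.3            # preferred walking speed, m/s
    radius: float = 0.3        # body radius, m


# Illustrative parameter values (assumptions, not calibrated).
TAU = 0.5  # relaxation time, s
A = 2.0    # repulsion strength, m/s^2
B = 0.3    # repulsion range, m


def sfm_step(agents: list[Agent], dt: float) -> list[Agent]:
    """Advance all agents by one semi-implicit Euler step of the social force model."""
    new_agents = []
    for i, a in enumerate(agents):
        # Driving term: relax toward the preferred velocity v0 * e_i.
        gx, gy = a.goal[0] - a.pos[0], a.goal[1] - a.pos[1]
        dist_goal = math.hypot(gx, gy)
        ex, ey = (gx / dist_goal, gy / dist_goal) if dist_goal > 1e-9 else (0.0, 0.0)
        fx = (a.v0 * ex - a.vel[0]) / TAU
        fy = (a.v0 * ey - a.vel[1]) / TAU
        # Exponential repulsion from every other agent j, along n_ij (from j to i).
        for j, b in enumerate(agents):
            if i == j:
                continue
            dx, dy = a.pos[0] - b.pos[0], a.pos[1] - b.pos[1]
            d = math.hypot(dx, dy)
            if d < 1e-9:
                continue
            mag = A * math.exp((a.radius + b.radius - d) / B)
            fx += mag * dx / d
            fy += mag * dy / d
        # Update velocity first, then position with the new velocity.
        vx, vy = a.vel[0] + fx * dt, a.vel[1] + fy * dt
        new_pos = (a.pos[0] + vx * dt, a.pos[1] + vy * dt)
        new_agents.append(Agent(new_pos, (vx, vy), a.goal, a.v0, a.radius))
    return new_agents
```

All forces are computed from the previous state, so the result does not depend on the order of the agents in the list; the positions returned by each step can then be handed to the 3D visualization.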

Manipulation with a quadruped robot

The project aims to develop an autonomous quadruped robot system capable of mapping, navigation, object detection, and manipulation.

Learning-based navigation among dynamic obstacles

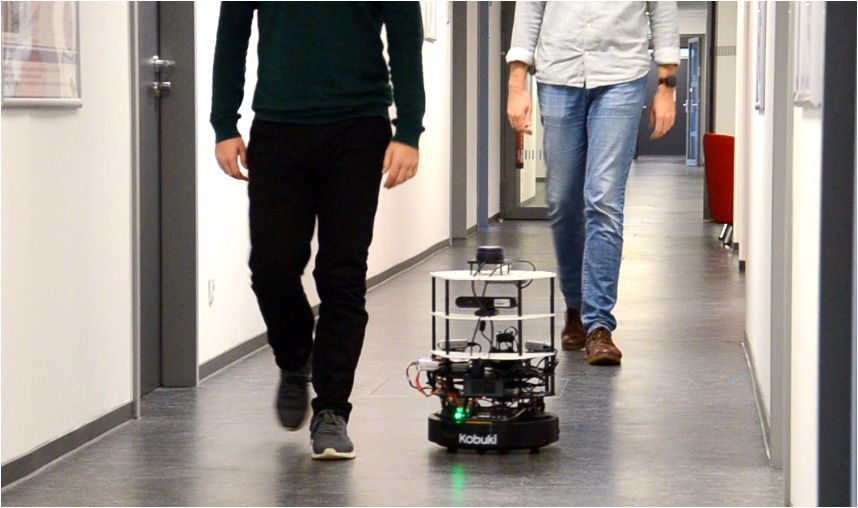

Using state-of-the-art deep reinforcement learning methods, you will teach a robot to navigate among static and dynamic obstacles. A neural network is trained to control the robot directly from the observations of a LiDAR sensor. You will design your own learning architecture, including the network design, so that the robot builds a solid understanding of its partially observable environment, and the reward function, so that the navigation objectives are learned informatively and efficiently. After the robot has been trained successfully in a PyBullet-based simulation, the goal is to transfer the policy to a real robot using ROS.

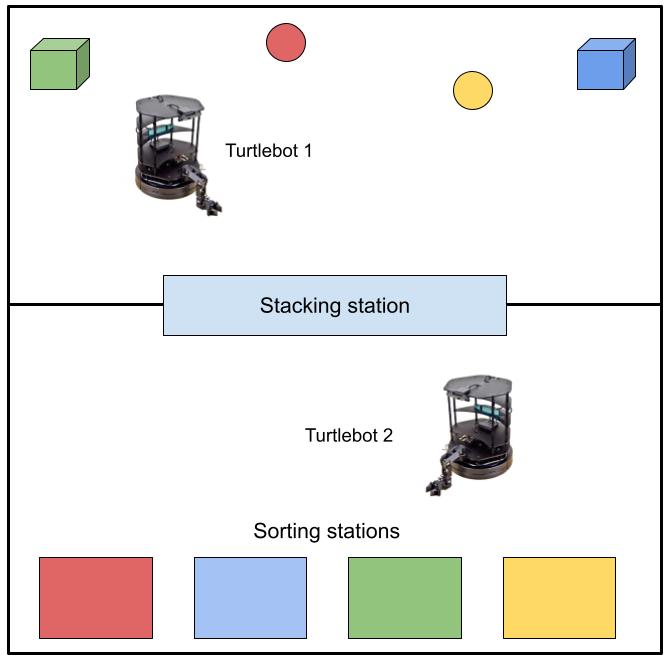
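To illustrate what a reward function for this task might look like, the sketch below combines four commonly used terms: a dense reward for progress toward the goal, a small per-step cost, a terminal bonus for reaching the goal, and a terminal penalty when the closest LiDAR reading falls below a safety distance (used here as a simple collision proxy). All names, weights, and thresholds are hypothetical starting points, not values prescribed by the project.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class RewardConfig:
    goal_reward: float = 100.0         # terminal bonus for reaching the goal
    collision_penalty: float = -100.0  # terminal penalty for a (near) collision
    progress_weight: float = 10.0      # reward per metre of progress toward the goal
    step_penalty: float = -0.05        # small cost per step to favor short paths
    goal_radius: float = 0.3           # m, distance at which the goal counts as reached
    safety_distance: float = 0.2       # m, closest LiDAR range treated as a collision


def navigation_reward(prev_goal_dist: float, goal_dist: float,
                      lidar_ranges: Sequence[float],
                      cfg: RewardConfig = RewardConfig()) -> tuple[float, bool]:
    """Return (reward, episode_done) for one control step."""
    if min(lidar_ranges) < cfg.safety_distance:
        return cfg.collision_penalty, True
    if goal_dist < cfg.goal_radius:
        return cfg.goal_reward, True
    # Dense shaping term: positive when the robot moved closer to the goal.
    progress = prev_goal_dist - goal_dist
    return cfg.progress_weight * progress + cfg.step_penalty, False
```

Because the collision check runs first, a step that reaches the goal while also getting too close to an obstacle is counted as a collision; that ordering is a design choice worth revisiting for your own setup.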

Collaborative stacking and sorting system with mobile robots

In this project, students will develop a collaborative system using two TurtleBots for stacking and sorting items. The first TurtleBot has to collect cubes and balls and stack them at the first station, while the second TurtleBot has to sort the stacked items by color into different stations.
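One way to picture the hand-over between the two robots is as a last-in, first-out stack: assuming the sorting TurtleBot can only take the top item, it removes items in the reverse order of stacking and routes each one to the station for its color. The color-to-station table, the fallback station, and the function name below are hypothetical.

```python
# Hypothetical assignment of colors to sorting stations.
STATION_FOR_COLOR = {"red": "station_A", "green": "station_B", "blue": "station_C"}
FALLBACK_STATION = "station_unknown"  # for colors without an assigned station

Item = tuple[str, str]  # (shape, color), e.g. ("cube", "red")


def plan_sorting(stack: list[Item]) -> list[tuple[Item, str]]:
    """Return (item, target station) pairs in the order the sorting robot picks them.

    `stack` lists items from bottom to top. Only the top item is reachable,
    so items are removed in reverse order (last in, first out).
    """
    return [(item, STATION_FOR_COLOR.get(item[1], FALLBACK_STATION))
            for item in reversed(stack)]


if __name__ == "__main__":
    stack = [("cube", "red"), ("ball", "blue"), ("cube", "green")]
    for item, station in plan_sorting(stack):
        print(item, "->", station)
```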