Active Implicit Reconstruction Using One-Shot View Planning

Authors:

H. Hu, S. Pan, L. Jin, M. Popović, M. Bennewitz

Type:

Conference Proceeding

Published in:

IEEE International Conference on Robotics and Automation (ICRA)

Year:

2024

Related Projects:

AID4Crops - Automation and AI for Monitoring and Decision Making of Horticultural Crops

DOI:

https://doi.org/10.1109/ICRA57147.2024.10611542

Links:

BibTeX String

@inproceedings{hu2024icra,
  title={Active Implicit Reconstruction Using One-Shot View Planning},
  author={Hu, Hao and Pan, Sicong and Jin, Liren and Popovi{\'c}, Marija and Bennewitz, Maren},
  booktitle={IEEE International Conference on Robotics and Automation (ICRA)},
  year={2024}
}

Abstract:

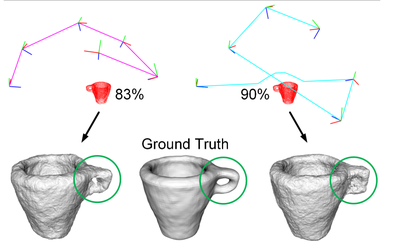

Active object reconstruction using autonomous robots is attracting growing interest. A primary goal in this task is to maximize the information gained about the object to be reconstructed, given limited on-board resources. Previous view planning methods are inefficient because they rely on an iterative paradigm based on explicit representations, which (1) plans a path only to the next-best view and (2) requires a considerable number of low-gain views in terms of surface coverage. To address these limitations, we integrate implicit representations into One-Shot View Planning (OSVP). The key idea behind our approach is to use implicit representations to recover the small missing surface areas instead of observing them with extra views. To this end, we design a deep neural network, named OSVP, that directly predicts a set of views given a dense point cloud refined from an initial sparse observation. To train the OSVP network, we generate supervision labels using dense point clouds refined by implicit representations and by solving set covering optimization problems. Simulated experiments show that our method achieves sufficient reconstruction quality, outperforming several baselines under limited view and movement budgets. We further demonstrate the applicability of our approach in a real-world object reconstruction scenario.
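The abstract states that supervision labels come from set covering optimization over dense point clouds. As a rough illustration only, the sketch below frames label generation as choosing candidate views that together see every coverable surface point. It uses the classic greedy approximation to set cover rather than an exact solver; the abstract does not say which solver the authors use. The function name `greedy_view_cover`, the integer view and point IDs, and the precomputed visibility sets are all hypothetical and are not taken from the paper.

```python
from typing import Dict, List, Set


def greedy_view_cover(visibility: Dict[int, Set[int]], surface_points: Set[int]) -> List[int]:
    """Hypothetical helper: greedy approximation to set cover for view selection.

    visibility maps a candidate view ID to the set of surface point IDs it sees
    (assumed to be precomputed, e.g. by ray casting on a dense point cloud).
    Returns the chosen view IDs in the order they were selected.
    """
    # Only points seen by at least one candidate view can ever be covered.
    coverable = set().union(*visibility.values()) & surface_points
    uncovered = set(coverable)
    chosen: List[int] = []
    while uncovered:
        # Pick the view that sees the most still-uncovered points;
        # iterating over sorted IDs makes ties resolve to the lowest ID.
        best = max(sorted(visibility), key=lambda v: len(visibility[v] & uncovered))
        gain = visibility[best] & uncovered
        if not gain:
            # Defensive guard: no view adds coverage, so stop.
            break
        chosen.append(best)
        uncovered -= gain
    return chosen


if __name__ == "__main__":
    views = {0: {0, 1, 2}, 1: {2, 3}, 2: {3, 4, 5}, 3: {0, 5}}
    print(greedy_view_cover(views, {0, 1, 2, 3, 4, 5}))  # [0, 2]
```

Greedy selection is used here only because it is short and has a known logarithmic approximation bound. An exact integer programming formulation would give minimal view sets, which is what a set covering optimization for training labels would more plausibly target.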