Towards Rhino-AR: A System for Real-Time 3D Human Pose Estimation and Volumetric Scene Integration on Embedded AR Headsets

Authors:

L. Van Holland, N. Kaspers, N. Dengler, P. Stotko, M. Bennewitz, R. Klein

Type:

Conference Proceeding

Published in:

Accepted to: International Conference on Virtual Reality (ICVR)

Year:

2025

BibTeX String:

@inproceedings{holland2025icvr, title = {Towards {Rhino}-{AR}: A {System} for {Real}-{Time} 3D {Human} {Pose} {Estimation} and {Volumetric} {Scene} {Integration} on {Embedded} {AR} {Headsets}}, author = {Holland, Leif Van and Kaspers, Ninian and Dengler, Nils and Stotko, Patrick and Bennewitz, Maren and Klein, Reinhard}, booktitle = {International {Conference} on {Virtual} {Reality}}, year = {2025}, month = {5}, }

Abstract:

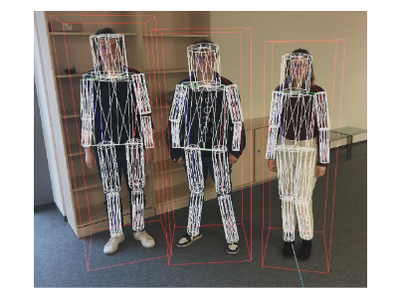

Real-time understanding of dynamic human presence is crucial for immersive Augmented Reality (AR), yet challenging on resource-constrained Head-Mounted Displays (HMDs). This paper introduces Rhino-AR, a pipeline for on-device 3D human pose estimation and dynamic scene integration for commercial AR headsets like the Magic Leap 2. Our system processes RGB and sparse depth data, first detecting 2D keypoints, then robustly lifting them to 3D. Beyond pose estimation, we reconstruct a coarse anatomical model of the human body, tightly coupled with the estimated skeleton. This volumetric proxy for dynamic human geometry is then integrated with the HMD’s static environment mesh by actively removing human-generated artifacts. This integration is crucial, enabling physically plausible interactions between virtual entities and real users, supporting real-time collision detection, and ensuring correct occlusion handling where virtual content respects real-world spatial dynamics. Implemented entirely on the Magic Leap 2, our method achieves low-latency pose updates (under 40 ms) and full 3D lifting (under 60 ms). Comparative evaluation against the RTMW3D-x baseline shows a Procrustes-Aligned Mean Per Joint Position Error below 140 mm, with absolute depth placement validated using an external Azure Kinect sensor. Rhino-AR demonstrates the feasibility of robust, real-time human-aware perception on mobile AR platforms, enabling new classes of interactive, spatially-aware applications without external computation.
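For readers unfamiliar with the reported metric, the following is a minimal sketch of how a Procrustes-Aligned Mean Per Joint Position Error (PA-MPJPE) is commonly computed. It is not taken from the paper: the function names `procrustes_align` and `pa_mpjpe`, the `(J, 3)` array layout, and the use of NumPy are assumptions made for illustration. The predicted skeleton is first aligned to the reference by the best similarity transform (scale, rotation, translation), and the mean Euclidean joint distance is taken afterwards.

```python
import numpy as np


def procrustes_align(pred, ref):
    """Align pred (J, 3) to ref (J, 3) with the least-squares similarity
    transform (scale, rotation, translation). Illustrative helper only."""
    mu_p, mu_r = pred.mean(axis=0), ref.mean(axis=0)
    p, r = pred - mu_p, ref - mu_r  # centre both joint sets

    # SVD of the 3x3 cross-covariance gives the optimal rotation.
    U, S, Vt = np.linalg.svd(p.T @ r)

    # Flip the last axis if needed so the result is a rotation, not a reflection.
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    D = np.diag([1.0, 1.0, d])
    R = Vt.T @ D @ U.T

    # Optimal isotropic scale for the chosen rotation.
    scale = (S * np.diag(D)).sum() / (p ** 2).sum()

    return scale * (R @ p.T).T + mu_r


def pa_mpjpe(pred, ref):
    """Mean per-joint Euclidean distance after Procrustes alignment.
    The result is in the same unit as the inputs (e.g. millimetres)."""
    aligned = procrustes_align(pred, ref)
    return float(np.linalg.norm(aligned - ref, axis=1).mean())
```

Because the alignment removes global scale, rotation, and translation, this metric measures only the articulated pose error. That is presumably why the abstract reports absolute depth placement separately, validated against an external sensor.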